The invention of the Neural Radiance Field (NeRF) algorithm has reignited many researchers' interest in 3D object synthesis. Thanks to this renewed interest, researchers have developed many alternative versions of NeRF that improve on the original in various respects. One notable example is Instant-NGP, which can synthesize a scene in under one minute, albeit at a low frame rate. I have covered that algorithm in another blog post.

The popularity of NeRF has also encouraged researchers to invent new algorithms that perform object synthesis with different methods, aiming for better resolution, faster synthesis time, or both. One such recently emerged algorithm is 3D Gaussian Splatting.

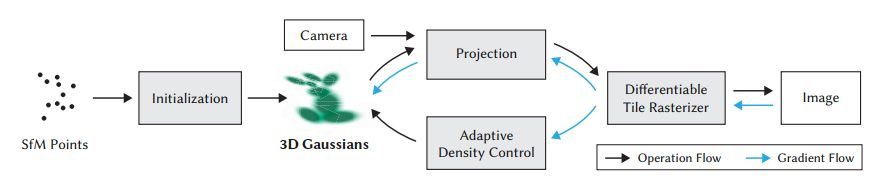

3D Gaussian Splatting is a 3D object synthesis algorithm that uses 3D Gaussian representations instead of the ray marching used in NeRF-based algorithms. This allows visual quality that is competitive with the state of the art at acceptable training times (around 10 minutes on an Nvidia T4). The algorithm is made up of three fundamental components: a 3D Gaussian representation of the scene, an anisotropic splatting method for rendering the radiance field, and a GPU sorting algorithm for fast and accurate backward passes.

The 3D Gaussian scene representation represents the radiance field as a set of anisotropic 3D Gaussians. These Gaussians are optimized for their 3D position, opacity, anisotropic covariance, and spherical harmonic coefficients, and their density is adaptively controlled during optimization. This produces a reasonably compact, unstructured, and precise representation of the scene. The advantages of this representation include its ability to compactly represent complex geometry, its high-quality rendering results, and its ability to handle complete, complex scenes including background, both indoors and outdoors and with large depth complexity.
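To make the list of optimized parameters concrete, here is a minimal sketch of what one Gaussian could look like as a data structure. The class name `Gaussian3D` and its field names are hypothetical, chosen for illustration only. The sketch assumes the anisotropic covariance is stored as a per-axis scale plus a rotation quaternion and assembled as Σ = R S Sᵀ Rᵀ, which keeps the matrix symmetric and positive semi-definite while its factors are optimized.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Gaussian3D:
    """One scene primitive (hypothetical layout, for illustration only)."""
    position: Tuple[float, float, float]          # 3D mean
    scale: Tuple[float, float, float]             # per-axis standard deviation
    rotation: Tuple[float, float, float, float]   # quaternion (w, x, y, z)
    opacity: float                                # in [0, 1]
    sh_coeffs: List[Tuple[float, float, float]]   # RGB spherical harmonic coefficients

    def covariance(self) -> List[List[float]]:
        """Return the 3x3 covariance R * diag(scale^2) * R^T."""
        w, x, y, z = self.rotation
        norm = math.sqrt(w * w + x * x + y * y + z * z)
        w, x, y, z = w / norm, x / norm, y / norm, z / norm
        # Rotation matrix of the normalized quaternion.
        R = [
            [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
        ]
        s2 = [s * s for s in self.scale]
        return [
            [sum(R[i][k] * s2[k] * R[j][k] for k in range(3)) for j in range(3)]
            for i in range(3)
        ]
```

With an identity rotation the covariance is simply diagonal, with the squared scales on the diagonal. Rotating the Gaussian mixes those variances across axes, which is what makes it anisotropic in world space.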

The anisotropic splatting method is a rendering technique that projects the 3D Gaussians onto 2D splats and blends them together to produce the final image. The anisotropic splats have different sizes and shapes in different directions, which allows them to capture the geometry and lighting of the scene better. The splats are blended using alpha blending that takes into account the visibility of each splat, which is determined using a GPU sorting algorithm. This allows for fast and accurate back-propagation during training, which is necessary for optimizing the 3D Gaussians to synthesize high-quality scenes or objects. In addition, the anisotropic splatting is visibility-aware, meaning that it takes into account the occlusion of each splat by other objects in the scene. This results in high-quality rendering of complex scenes with large depth complexity, both indoors and outdoors.
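The blending step can be illustrated for a single pixel. The following is a minimal sketch of front-to-back alpha compositing, not the actual GPU implementation. The function name `composite_front_to_back`, the input tuple layout, and the early-stop threshold are assumptions made for this example.

```python
def composite_front_to_back(splats, min_transmittance=1e-4):
    """Blend the splats covering one pixel.

    splats: iterable of (depth, (r, g, b), alpha) tuples, where alpha is the
    splat's opacity already evaluated at this pixel.
    Returns the blended color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    # Nearest splat first, so that occlusion is accounted for.
    for _depth, rgb, alpha in sorted(splats, key=lambda s: s[0]):
        weight = alpha * transmittance
        for channel in range(3):
            color[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # the pixel is effectively opaque; farther splats are hidden
    return tuple(color), transmittance
```

For example, a half-transparent red splat in front of an opaque blue one contributes half of the final color each, and nothing behind the blue splat can contribute any more.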

Compared to state-of-the-art NeRF algorithms such as Plenoxels or Mip-NeRF, 3D Gaussian Splatting can be orders of magnitude faster. A large part of this speed comes from its GPU sorting algorithm, which enables fast and accurate back-propagation during training. Back-propagation is required for optimizing the 3D Gaussians that represent the scene: it computes the gradients of the radiance field with respect to the Gaussian parameters. This requires sorting the Gaussians in depth order, which can be computationally expensive. To address this issue, the algorithm uses a GPU sorting algorithm based on radix sort, which is highly parallelizable and can sort large numbers of Gaussians in a fraction of a second. The sorting is done once per frame at the beginning of the rendering process, and the resulting sorted list of Gaussians is used to efficiently produce per-tile lists of Gaussians to process during the forward pass. This eliminates sequential primitive processing steps and produces more compact per-tile lists to traverse during the forward pass, resulting in faster rendering times. In short, GPU sorting enables real-time rendering of high-quality radiance fields.
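The idea of one global sort that yields per-tile, depth-ordered lists can be sketched on the CPU. This is only an illustration: Python's built-in sort stands in for the GPU radix sort, and the function name `build_tile_lists`, the input layout, and the 16-pixel tile size are assumptions made for this example.

```python
def build_tile_lists(splats, tile_size=16):
    """Group projected splats by screen tile, ordered front to back.

    splats: iterable of (gaussian_id, x_min, y_min, x_max, y_max, depth),
    where the box is the splat's screen-space bounding box in pixels
    (assumed non-negative).
    Returns {(tile_x, tile_y): [gaussian_id, ...]} sorted by depth.
    """
    keyed = []
    for gid, x_min, y_min, x_max, y_max, depth in splats:
        # Emit one (tile, depth) key per tile that the splat overlaps.
        for ty in range(int(y_min) // tile_size, int(y_max) // tile_size + 1):
            for tx in range(int(x_min) // tile_size, int(x_max) // tile_size + 1):
                keyed.append(((tx, ty), depth, gid))
    # A single sort over the combined (tile, depth) key orders all tiles at once.
    keyed.sort(key=lambda entry: (entry[0], entry[1]))
    tiles = {}
    for tile, _depth, gid in keyed:
        tiles.setdefault(tile, []).append(gid)
    return tiles
```

Because the tile is the leading part of the key, entries for the same tile end up contiguous and already in depth order, so each tile can be blended independently without any further per-pixel sorting.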

Utilization Guide

Similar to Instant-NGP, 3D Gaussian Splatting requires many images of the same scene or object from different angles, along with their respective camera parameters. Luckily, both requirements can be met by end users. To create a data set, simply take a video of the object or scene that covers it from a wide range of viewing angles. To keep the number of frames limited, the video should ideally be no longer than 5 seconds.

Then use a Python script to extract frames from the video. An example is given below:

    import os

    import cv2

    # Open the video file
    video_path = 'vid1.mp4'
    cap = cv2.VideoCapture(video_path)

    # Check if the video file was opened successfully
    if not cap.isOpened():
        raise SystemExit("Error: Could not open video file.")

    frame_count = 0

    # Create a directory to save the frames
    output_directory = 'images'
    os.makedirs(output_directory, exist_ok=True)

    # Loop through the video frames and save them as images
    while True:
        ret, frame = cap.read()
        if not ret:
            # No more frames to read
            break
        # frame = cv2.flip(frame, 0)  # Use this line if frames are flipped upside down

        # Save the frame as an image
        frame_filename = os.path.join(output_directory, f"frame_{frame_count:04d}.jpg")
        cv2.imwrite(frame_filename, frame)
        frame_count += 1

    # Release the video capture object
    cap.release()
    print(f"Extracted {frame_count} frames.")

Once the frames have been extracted, put them in a folder called input.
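This step can also be scripted. The following is a minimal sketch, assuming the frames were saved as .jpg files as in the extraction script above. The helper name `stage_frames` and the data set folder passed to it are hypothetical. The `input` subfolder name matters because the conversion script later reads images from `<location>/input`.

```python
import shutil
from pathlib import Path


def stage_frames(frames_dir, dataset_dir):
    """Copy every .jpg from frames_dir into <dataset_dir>/input.

    Returns the number of copied images.
    """
    input_dir = Path(dataset_dir) / "input"
    input_dir.mkdir(parents=True, exist_ok=True)
    copied = 0
    for image in sorted(Path(frames_dir).glob("*.jpg")):
        shutil.copy2(image, input_dir / image.name)
        copied += 1
    return copied
```

For example, `stage_frames("images", "my_scene")` would fill `my_scene/input`, and `my_scene` would then be the location passed to the conversion script.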

Next, the camera parameters need to be estimated from the images. To do this, install a Structure from Motion (SfM) tool; the script below calls COLMAP. This tutorial shows how to install it, starting from minute 10:

Once SfM is successfully installed, save the following Python script as “convert.py”.

    #
    # Copyright (C) 2023, Inria
    # GRAPHDECO research group, https://team.inria.fr/graphdeco
    # All rights reserved.
    #
    # This software is free for non-commercial, research and evaluation use
    # under the terms of the LICENSE.md file.
    #
    # For inquiries contact george.drettakis@inria.fr
    #

    import os
    import logging
    from argparse import ArgumentParser
    import shutil

    # This Python script is based on the shell converter script provided in the MipNerF 360 repository.
    parser = ArgumentParser("Colmap converter")
    parser.add_argument("--no_gpu", action='store_true')
    parser.add_argument("--skip_matching", action='store_true')
    parser.add_argument("--source_path", "-s", required=True, type=str)
    parser.add_argument("--camera", default="OPENCV", type=str)
    parser.add_argument("--colmap_executable", default="", type=str)
    parser.add_argument("--resize", action="store_true")
    parser.add_argument("--magick_executable", default="", type=str)
    args = parser.parse_args()
    colmap_command = '"{}"'.format(args.colmap_executable) if len(args.colmap_executable) > 0 else "colmap"
    magick_command = '"{}"'.format(args.magick_executable) if len(args.magick_executable) > 0 else "magick"
    use_gpu = 1 if not args.no_gpu else 0

    if not args.skip_matching:
        os.makedirs(args.source_path + "/distorted/sparse", exist_ok=True)

        ## Feature extraction
        feat_extracton_cmd = colmap_command + " feature_extractor "\
            "--database_path " + args.source_path + "/distorted/database.db \
            --image_path " + args.source_path + "/input \
            --ImageReader.single_camera 1 \
            --ImageReader.camera_model " + args.camera + " \
            --SiftExtraction.use_gpu " + str(use_gpu)
        exit_code = os.system(feat_extracton_cmd)
        if exit_code != 0:
            logging.error(f"Feature extraction failed with code {exit_code}. Exiting.")
            exit(exit_code)

        ## Feature matching
        feat_matching_cmd = colmap_command + " exhaustive_matcher \
            --database_path " + args.source_path + "/distorted/database.db \
            --SiftMatching.use_gpu " + str(use_gpu)
        exit_code = os.system(feat_matching_cmd)
        if exit_code != 0:
            logging.error(f"Feature matching failed with code {exit_code}. Exiting.")
            exit(exit_code)

        ### Bundle adjustment
        # The default Mapper tolerance is unnecessarily large,
        # decreasing it speeds up bundle adjustment steps.
        mapper_cmd = (colmap_command + " mapper \
            --database_path " + args.source_path + "/distorted/database.db \
            --image_path " + args.source_path + "/input \
            --output_path " + args.source_path + "/distorted/sparse \
            --Mapper.ba_global_function_tolerance=0.000001")
        exit_code = os.system(mapper_cmd)
        if exit_code != 0:
            logging.error(f"Mapper failed with code {exit_code}. Exiting.")
            exit(exit_code)

    ### Image undistortion
    ## We need to undistort our images into ideal pinhole intrinsics.
    img_undist_cmd = (colmap_command + " image_undistorter \
        --image_path " + args.source_path + "/input \
        --input_path " + args.source_path + "/distorted/sparse/0 \
        --output_path " + args.source_path + " \
        --output_type COLMAP")
    exit_code = os.system(img_undist_cmd)
    if exit_code != 0:
        logging.error(f"Image undistortion failed with code {exit_code}. Exiting.")
        exit(exit_code)

    files = os.listdir(args.source_path + "/sparse")
    os.makedirs(args.source_path + "/sparse/0", exist_ok=True)
    # Move each file from the sparse directory into sparse/0
    for file in files:
        if file == '0':
            continue
        source_file = os.path.join(args.source_path, "sparse", file)
        destination_file = os.path.join(args.source_path, "sparse", "0", file)
        shutil.move(source_file, destination_file)

    if(args.resize):
        print("Copying and resizing...")

        # Resize images.
        os.makedirs(args.source_path + "/images_2", exist_ok=True)
        os.makedirs(args.source_path + "/images_4", exist_ok=True)
        os.makedirs(args.source_path + "/images_8", exist_ok=True)
        # Get the list of files in the source directory
        files = os.listdir(args.source_path + "/images")
        # Copy each file from the source directory to the destination directory
        for file in files:
            source_file = os.path.join(args.source_path, "images", file)

            destination_file = os.path.join(args.source_path, "images_2", file)
            shutil.copy2(source_file, destination_file)
            exit_code = os.system(magick_command + " mogrify -resize 50% " + destination_file)
            if exit_code != 0:
                logging.error(f"50% resize failed with code {exit_code}. Exiting.")
                exit(exit_code)

            destination_file = os.path.join(args.source_path, "images_4", file)
            shutil.copy2(source_file, destination_file)
            exit_code = os.system(magick_command + " mogrify -resize 25% " + destination_file)
            if exit_code != 0:
                logging.error(f"25% resize failed with code {exit_code}. Exiting.")
                exit(exit_code)

            destination_file = os.path.join(args.source_path, "images_8", file)
            shutil.copy2(source_file, destination_file)
            exit_code = os.system(magick_command + " mogrify -resize 12.5% " + destination_file)
            if exit_code != 0:
                logging.error(f"12.5% resize failed with code {exit_code}. Exiting.")
                exit(exit_code)

    print("Done.")

Running this script with the following command estimates the camera parameters:

    python convert.py -s <location>

Once the camera parameters have been estimated, your data set folder, containing both the images and their estimated camera parameters, should look like this:
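Judging from the paths that convert.py reads and writes, the data set folder should contain at least the `input`, `images` and `sparse/0` directories. Here is a minimal sketch for checking this before uploading. The function name `missing_dataset_entries` is hypothetical, and the three model file names are an assumption based on COLMAP's usual binary output, not something stated in this post.

```python
from pathlib import Path

# Directories that convert.py reads from or writes to.
EXPECTED_DIRS = ("input", "images", "sparse/0")
# Assumed names of COLMAP's binary model files inside sparse/0.
EXPECTED_MODEL_FILES = ("cameras.bin", "images.bin", "points3D.bin")


def missing_dataset_entries(dataset_dir):
    """Return the expected directories and files that are absent."""
    root = Path(dataset_dir)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    for name in EXPECTED_MODEL_FILES:
        if not (root / "sparse" / "0" / name).is_file():
            missing.append(f"sparse/0/{name}")
    return missing
```

An empty list means the folder has the expected shape. Anything listed usually points to a conversion step that failed or was skipped.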

After this step, open the following Google Colab notebook and upload your data set folder:

https://colab.research.google.com/drive/1ImYGQNkURHEdbnpqJ3TVkotLrvyh-uY3#scrollTo=kgRO6lQmMKyZ

This Google Colab notebook, which I created, contains all the necessary instructions for synthesizing the object (in the form of a .ply file) and for viewing it interactively. Please note that the notebook requires a GPU to run.
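A quick sanity check on the resulting file is to read its header. The sketch below relies only on the standard PLY header layout (`element <name> <count>` lines terminated by `end_header`). The function name `read_ply_elements` is hypothetical, and reading the `vertex` count as the number of Gaussians assumes the file stores one vertex per Gaussian.

```python
def read_ply_elements(path):
    """Return {element_name: count} parsed from a PLY file header."""
    elements = {}
    with open(path, "rb") as ply_file:
        if ply_file.readline().strip() != b"ply":
            raise ValueError(f"{path} does not start with a PLY header")
        for raw_line in ply_file:
            line = raw_line.strip().decode("ascii", errors="replace")
            if line == "end_header":
                break  # the (possibly binary) payload starts here
            parts = line.split()
            if len(parts) == 3 and parts[0] == "element":
                elements[parts[1]] = int(parts[2])
    return elements
```

For example, `read_ply_elements("point_cloud.ply").get("vertex")` would give the number of stored points, where the file name is only a placeholder for your own output file.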

An example .ply file can be found below:

If you want to install the algorithm on your own machine, you can find the GitHub repository at the following link:

https://github.com/graphdeco-inria/gaussian-splatting/tree/main

If you want to install 3D Gaussian Splatting on a Windows-based computer, a tutorial is available on YouTube. You can find it at the following link: