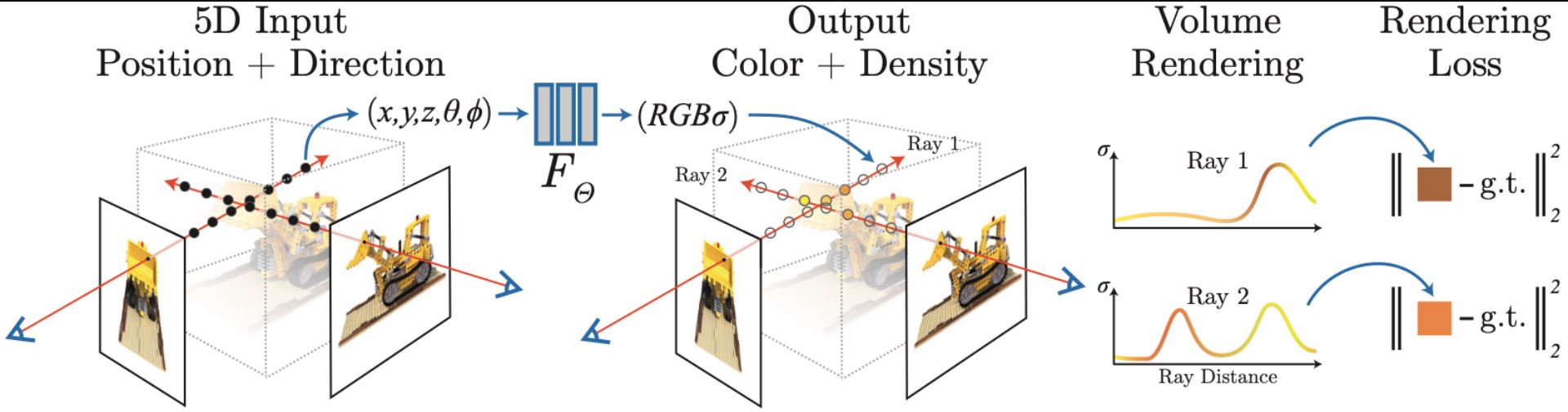

Neural Radiance Fields (NeRF) is a 3D synthesis method designed to solve the view synthesis problem from a finite number of 2D images. NeRF achieves this by treating the scene as a continuous 5-dimensional function whose inputs are a 3D position (x, y, z) in the scene and a 2D viewing direction (θ, φ), both derived from the camera parameters of the input images. A Multi-Layer Perceptron (MLP) is optimized to regress from these inputs to a single volume density and a view-dependent color (σ and (r, g, b), respectively), and the scene is rendered from those outputs.
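
To make the rendering step more concrete, here is a minimal sketch of how the (σ, r, g, b) values sampled along one camera ray can be blended into a single pixel color, using the standard volume rendering sum from the NeRF paper. The function name and the hard-coded sample values are my own illustrative assumptions; in a real NeRF the densities and colors would come from the MLP.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend per-sample (sigma, rgb) values along one ray into one pixel color.

    densities: volume density sigma at each sample along the ray
    colors:    (r, g, b) tuple at each sample
    deltas:    distance between consecutive samples
    """
    transmittance = 1.0  # fraction of light that still reaches the camera
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha  # light left after passing this segment
    return pixel


# Hypothetical ray: empty space first, then a dense red surface.
pixel = composite_ray(
    densities=[0.0, 50.0],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[0.1, 0.1],
)
print(pixel)
```

The empty sample contributes nothing because its density is zero, so the pixel ends up almost entirely red. Training a NeRF amounts to adjusting the MLP until pixels computed this way match the input photos.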

While the end result is state of the art, it can take hours or even days to train a single scene or object. To address this problem, an optimization method called Instant Neural Graphics Primitives (Instant-NGP) was developed. Instant-NGP stores trainable feature vectors in multi-resolution hash tables and uses them to encode the inputs of the MLP. This allows a much smaller MLP to be used, so both training and rendering become far faster than with the original NeRF network.
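
The idea of a multi-resolution hash table can be sketched in a few lines. The snippet below follows the formulas of the Instant-NGP paper as I understand them: grid resolutions grow geometrically between a coarse and a fine level, and the integer coordinates of a grid corner are hashed into a fixed-size table by XOR-ing them with large primes. The default values (16 levels, resolutions 16 to 2048, 2^19 slots) and all function names are assumptions chosen for illustration. The sketch also skips the feature lookup and interpolation, and it hashes every level, whereas the real implementation indexes coarse levels directly.

```python
import math

# Primes used by the spatial hash described in the Instant-NGP paper.
PRIMES = (1, 2654435761, 805459861)


def level_resolutions(n_min=16, n_max=2048, n_levels=16):
    """Grid resolution of each level, growing geometrically from n_min to n_max."""
    growth = math.exp((math.log(n_max) - math.log(n_min)) / (n_levels - 1))
    return [math.floor(n_min * growth ** level) for level in range(n_levels)]


def hash_index(corner, table_size):
    """Map integer grid coordinates (x, y, z) to a slot in the hash table."""
    h = 0
    for coordinate, prime in zip(corner, PRIMES):
        h ^= coordinate * prime
    return h % table_size


def corner_slots(point, table_size=2 ** 19):
    """For a point in [0, 1)^3, return the slot of its lower grid corner per level."""
    slots = []
    for resolution in level_resolutions():
        corner = tuple(math.floor(p * resolution) for p in point)
        slots.append(hash_index(corner, table_size))
    return slots


print(level_resolutions())
print(corner_slots((0.5, 0.25, 0.75)))
```

Each slot would hold a small trainable feature vector. The features gathered from all levels are concatenated and fed to the MLP in place of the raw coordinates.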

With Instant-NGP, it is possible to form an object or a scene in a couple of minutes on average, or even in under a minute with a fast enough Nvidia GPU. What is more, it requires only a few seconds of video of the object in question. So with a smartphone and an Nvidia GPU, one can synthesize a 3D object or a scene of any sort in less than 5 minutes.

In this blog post, we are going to explore how to do this step by step on a Windows machine.

Hardware Requirements

Instant-NGP requires an Nvidia GPU with tensor cores to function. The required CUDA Toolkit version is 11.6 or higher. In addition, a minimum of 6 GB of VRAM is required, but 10 GB or more is recommended.
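
If you are not sure how much VRAM your card has, a quick check along the following lines may help. It is a sketch that assumes the nvidia-smi tool shipped with the Nvidia driver is on your PATH; the helper names and the 6 GB / 10 GB thresholds (taken from the requirements above) are my own choices. It does not check for tensor cores or the CUDA Toolkit version.

```python
import shutil
import subprocess

# Ask nvidia-smi for one "name, memory in MiB" line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpus(smi_output):
    """Turn lines such as 'NVIDIA GeForce RTX 3080, 10240' into (name, MiB) pairs."""
    gpus = []
    for line in smi_output.strip().splitlines():
        name, _, memory_mib = line.rpartition(",")
        if memory_mib.strip().isdigit():  # skip lines without a numeric memory value
            gpus.append((name.strip(), int(memory_mib)))
    return gpus


def vram_verdict(memory_mib, minimum_gb=6, recommended_gb=10):
    """Compare the reported VRAM with the minimum and recommended sizes."""
    memory_gb = memory_mib / 1024
    if memory_gb < minimum_gb:
        return "below minimum"
    if memory_gb < recommended_gb:
        return "meets minimum"
    return "recommended"


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; is the Nvidia driver installed?")
    else:
        result = subprocess.run(QUERY, capture_output=True, text=True)
        if result.returncode != 0:
            print("nvidia-smi failed:", result.stderr.strip())
        for name, memory_mib in parse_gpus(result.stdout):
            print(f"{name}: {memory_mib} MiB -> {vram_verdict(memory_mib)}")
```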

Installation Guide

Start the installation by cloning the GitHub repository from the following link:

https://github.com/bycloudai/instant-ngp-Windows

The GitHub page lists the steps required for installation. There is also a YouTube video that explains all of the installation procedures in detail.

Utilization Guide

As mentioned earlier, it is possible to synthesize 3D objects or scenes from videos, even ones taken with your smartphone:

1) First, record a short video of the scene. An ideal length is about 5 seconds. Please note that longer videos produce more frames and therefore require more computation time on the GPU.

2) Convert the video you have created into a set of images. This is straightforward with Python and OpenCV. Example code for this task is provided below:

    import os
    import sys

    import cv2

    # Open the video file
    video_path = 'vid1.mp4'
    cap = cv2.VideoCapture(video_path)

    # Check if the video file was opened successfully
    if not cap.isOpened():
        print("Error: Could not open video file.")
        sys.exit(1)

    frame_count = 0

    # Create a directory to save the frames
    output_directory = 'images'
    os.makedirs(output_directory, exist_ok=True)

    # Loop through the video frames and save them as images
    while True:
        ret, frame = cap.read()
        if not ret:
            break  # No more frames to read
        # frame = cv2.flip(frame, 0)  # Use this line if frames are flipped upside down

        # Save the frame as an image
        frame_filename = f"{output_directory}/frame_{frame_count:04d}.jpg"
        cv2.imwrite(frame_filename, frame)
        frame_count += 1

    # Release the video capture object
    cap.release()
    print(f"Extracted {frame_count} frames.")
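
Since more frames mean more computation, you may want to keep only an evenly spaced subset of frames when the video is longer than a few seconds. The helper below is a small sketch of that idea; its name and the example numbers (a 5-second clip at 30 fps, capped at 50 frames) are assumptions for illustration, not something the repository provides.

```python
def select_frame_indices(total_frames, max_frames):
    """Return up to max_frames frame indices spread evenly over the video."""
    if total_frames <= 0 or max_frames <= 0:
        return []
    if total_frames <= max_frames:
        return list(range(total_frames))  # short video: keep every frame
    step = total_frames / max_frames
    return [int(i * step) for i in range(max_frames)]


# Hypothetical example: 150 frames in the video, keep at most 50 of them.
keep = set(select_frame_indices(150, 50))
print(sorted(keep)[:5])
```

In the extraction loop you would then call cv2.imwrite only when the current frame number is in keep. The total frame count can be read with cap.get(cv2.CAP_PROP_FRAME_COUNT), although that value can be approximate for some video files.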

3) Once the images have been generated, the camera parameters need to be extracted. For this step, you can use the COLMAP script that comes with the repository. For the best result, set the matcher parameter (--colmap_matcher) to “exhaustive”. For the fastest result, set it to “sequential”. For the --images argument, select the path where the images folder exists. An example command is given below:

    python C:\Users\Gurcan\Documents\Tutorial\ngp\instant-ngp\scripts\colmap2nerf.py --colmap_matcher exhaustive --run_colmap --aabb_scale 16 --images C:\Users\Gurcan\Documents\Tutorial\ngp\instant-ngp\data\custom_fox

4) After step 3, you should have a “transforms.json” file. Open this file in a text editor and modify the file paths inside it. The file path of each image should be in the form “images/frame_0000.jpg”. Then move “transforms.json” into the folder that contains the images folder, so that the dataset folder holds the images folder and “transforms.json” side by side.
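
Editing every path by hand gets tedious when there are many frames, so the path rewrite in step 4 can also be scripted. The sketch below assumes the usual layout of “transforms.json”, with a top-level "frames" list whose entries each have a "file_path" field. The function names are mine, and it is worth keeping a backup of the file before overwriting it.

```python
import json
import ntpath  # splits on both "\" and "/", regardless of the operating system


def normalize_frame_paths(transforms, image_dir="images"):
    """Rewrite every frame's file_path to the form 'images/<file name>'."""
    for frame in transforms.get("frames", []):
        filename = ntpath.basename(frame["file_path"])
        frame["file_path"] = f"{image_dir}/{filename}"
    return transforms


def rewrite_transforms_file(path="transforms.json"):
    """Load transforms.json, fix the paths, and write the file back in place."""
    with open(path, "r", encoding="utf-8") as handle:
        transforms = json.load(handle)
    normalize_frame_paths(transforms)
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(transforms, handle, indent=2)


# Hypothetical example of what COLMAP-derived paths might look like.
example = {"frames": [{"file_path": r"C:\data\custom_fox\images\frame_0000.jpg"}]}
print(normalize_frame_paths(example)["frames"][0]["file_path"])
```

Calling rewrite_transforms_file() from the folder that holds “transforms.json” applies the same change to the real file.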

5) Once “transforms.json” is in place, you can start the synthesis. To do that, first enter the path where testbed.exe is located, followed by --scene and the path of the dataset folder. Once the command is run, the object will be synthesized. A sample command is given below:

    C:\Users\Gurcan\Documents\Tutorial\ngp\instant-ngp\build\testbed.exe --scene C:\Users\Gurcan\Documents\Tutorial\ngp\instant-ngp\data\nerf\fox

Conclusion

In this short tutorial, I have explained what NeRF and Instant-NGP are, and then shown how to use them with a smartphone and an Nvidia GPU. I hope this tutorial will be useful for anyone willing to explore AI-based object synthesis.