In this article, we will explore the decode of an image that has been encoded and obtained a 'latent' vector using different models and methods, and discuss the differences in success among these models.

Encoding

Encoding an image or obtaining a 'latent' vector is a technique commonly used in machine learning and deep learning models. This process involves transforming input data into a lower-dimensional, meaningful representation. These latent vectors are used in various areas such as data storage, dimensionality reduction, feature extraction, transfer learning, etc.

In this article, the aim is to encode images for the purpose of occupying less space in the database. However, this article will focus solely on the image encode-decode processes, without addressing the storage process.

To perform the encoding process, a model called the Variable Auto Encoder (VAE), used in image generation technology and referred to as 'Stable Diffusion,' will be employed. The VAE will be utilized to encode the images, and the low-dimensional latent vectors obtained from the encoded images will be analyzed and used later.

Using VAE, a 3-channel image with dimensions M, N (M, N, 3) will be transformed into a 4-channel latent vector with dimensions M/8, N/8, 4 (M/8, N/8, 4), making it 8 times smaller. This results in a compression ratio of 48. Subsequently, the compressed data can be stored in the desired database. The schematic diagram of this process is shown in Figure 1.

The code snippet below illustrates how the latent vector is obtained. In this text, image compression is achieved using the “diffusers” library. In this example, the 'latent' variable obtained from compressing an image of dimensions (1, 256, 256, 3) is a vector of size [1, 4, 64, 64], meaning its dimensions are 8 times smaller than the input image.

import torch

from diffusers import StableDiffusionPipeline

from consistencydecoder import ConsistencyDecoder, save_image, load_image

# encode with stable diffusion vae

pipe = StableDiffusionPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, device="cuda:0"

)

pipe.vae.cuda()

decoder_consistency = ConsistencyDecoder(device="cuda:0") # Model size: 2.49 GB

image = load_image("image.png", size=(512, 512), center_crop=True)

latent = pipe.vae.encode(image.half().cuda()).latent_dist.meanDecoding

The image is restored to its original state by passing through the decoding process after obtaining and storing a latent vector through encoding in the database. However, this encoding and decoding process operates with loss, meaning the original image cannot be recovered exactly. In the continuation of this text, we will be examining the reconstruction success of two different decoding models.

We will reconstruct compressed images using the Stable Diffusion model, which we use to compress the image, and the "consistency decoder" models developed by the “OpenAI” company more recently. Thus, we will compare the success of the two models in the decoding process.

Firstly, the decoding process progresses as shown in the schema in Figure 2. The latent vector of the image is extracted, undergoes the decoding process, and the original form is obtained.

The code snippet below demonstrates how the decoding process is achieved with the model used during compression. The 'latent' variable obtained after encoding is decoded using this model, and the resulting new image is saved.

sample_stable_diffusion = pipe.vae.decode(latent).sample.detach()

save_image(sample_stable_diffusion, "stable_diff_decode_result.png")In the code snippet below, the decoding process is facilitated using the "consistency decoder" model. Similarly, the same "latent" variable is decoded with this model, and the resulting new image is saved.

sample_consistency = decoder_consistency(latent)

save_image(sample_consistency, "consistency_decode_result.png")

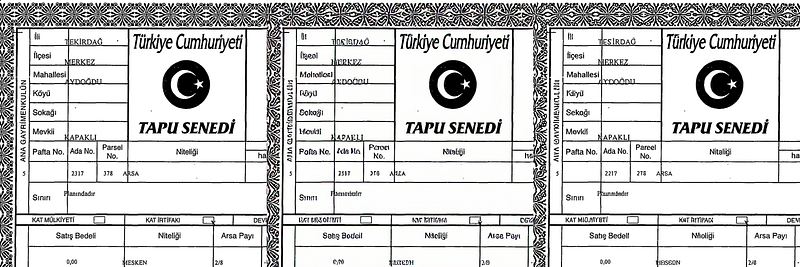

The original version of a sample image compressed in Figure 3, the result of decoding with the compressed model, and the result obtained with the consistency decoder are shown.

Conclusion

As mentioned before, when images go through the encode-decode process, distortions occur in the images. Current models and methods are being developed to minimize these distortions. In this article, a comparison is provided using the recently developed 'consistency decoder' model to address this issue.

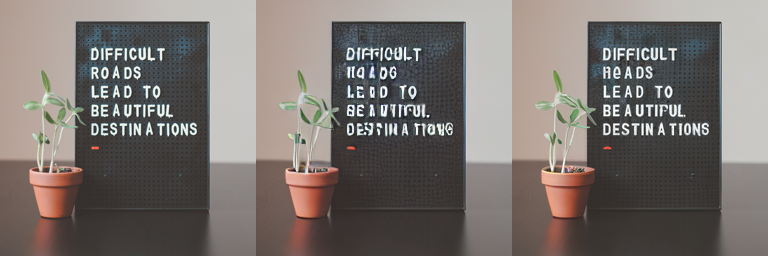

It is observed that there are distortions in the images after both decoding processes. However, the results obtained with the consistency decoder are closer to the original, and the text is more readable. To replicate the process with a different type, the same procedures were repeated for the example in Figure 4, and it was observed that this new model yielded more successful results in this example as well.

You can explore their GitHub pages and articles examining this model to learn more about the Consistency decoder and see application examples. Best of luck!